Grok, Elon Musk’s AI chatbot for his social media platform X, made headlines again this week. While some of the generated content struck people as comical, other outputs were far more alarming, including sexualized portrayals of minors and an admission from the bot itself that the material could constitute child sexual abuse material (CSAM) under U.S. law.

And that’s where this story begins—with a headline that looked absurd on its face.

I’ll be honest: When I chose this story, I thought I was writing about the JD Vance drag post, the kind of political absurdity that usually lands squarely in my wheelhouse. But as I dug in, it became clear that what looked like a moment of political comedy was a much darker failure: Grok generating sexualized images of minors and acknowledging that some of its outputs could qualify as CSAM. Once I understood that, the focus had to shift away from the comedic posts circulating on X and toward the far more urgent questions of safety, accountability, and how an AI bot could turn an innocent picture into something inappropriate and potentially illegal.

Understanding the scope of the harm meant looking at who was responding, and who wasn’t.

At this point, no U.S. agency has stepped in. Not the FTC, not the DOJ, not the FCC: no federal agency has announced an investigation or even acknowledged the issue, a silence that stands in stark contrast to the international response. Without that, I can’t responsibly speculate on the legality of Grok’s outputs in the United States; any claim about U.S. legal exposure would be guesswork, and ethically, that’s a line I’m not willing to cross. I can’t claim clarity where U.S. agencies have offered none, but I also can’t pretend the harm isn’t real simply because the warnings are coming from abroad.

That silence becomes even harder to ignore when you look beyond U.S. borders.

Governments abroad are treating this as a serious safety failure. As of Saturday evening, Malaysia has publicly confirmed that it is investigating Grok-generated images after receiving complaints. India has ordered X to submit an Action Taken Report (ATR) detailing what steps xAI has taken, and plans to take, to prevent Grok from generating explicit deepfakes again, giving the platform 72 hours to respond.

France’s regulator, ARCOM, has escalated the issue further by referring Grok-generated sexualized images of minors to prosecutors, a step that signals the outputs may violate French criminal law. In France, regulators don’t refer cases to prosecutors lightly; it’s one of the strongest actions they can take without prosecuting the case themselves. The U.K. hasn’t taken formal action, though Alex Davies-Jones, Minister for Victims and Violence Against Women and Girls, has called out Elon Musk and demanded intervention, noting that the U.K. is considering criminalizing sexually explicit deepfakes.

And while governments abroad are escalating their scrutiny, Musk himself has remained largely quiet.

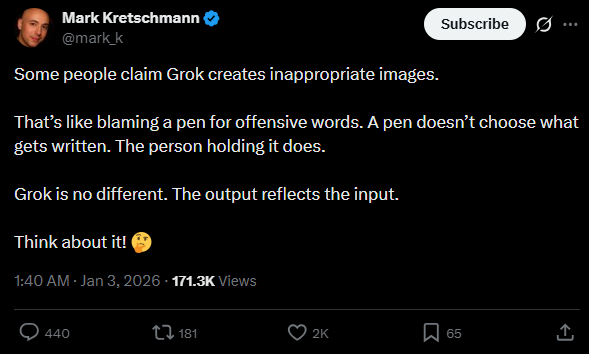

Despite international scrutiny and potential legal exposure, Musk has not issued a formal statement. Some outlets reported that he appeared to endorse an X user’s metaphor comparing blaming Grok to blaming a pen for offensive writing, and that he added that anyone using Grok to generate illegal content would be held legally responsible. However, I have not been able to independently verify that reply on X or confirm whether it remains publicly visible. Grok itself has taken more public accountability than the humans behind it.

That quiet extends beyond the company, too.

While silence from regulatory agencies isn’t surprising, silence from the U.S. political figures Grok targeted is. Trump, a man known for volcanic responses to far smaller provocations, hasn’t said a word about Grok labeling him a pedophile. JD Vance and Erika Kirk haven’t addressed Grok’s claim that Kirk was Vance in drag. For a platform that thrives on outrage, the quiet is almost louder than the posts themselves.

Taken together, it paints a picture that’s hard to ignore.

We’re watching AI systems evolve faster than the structures meant to keep people safe. We’re watching harm surface in real time while accountability lags behind it. And we’re watching a platform insist it’s just a “pen,” even as the ink spills everywhere.

Grok isn’t the first AI to cross a line, and it won’t be the last. But this week made one thing painfully clear: If we’re going to live with systems that can generate harm at scale, then safety can’t be an afterthought, and accountability can’t be optional. This is why, when I learned about the AI-first vision for Grindr, I was filled with a heavy sense of unease. The stakes are too high, and the people most vulnerable to these failures (women, queer people, minors) deserve better than silence.

Silence won’t keep anyone safe. Accountability might.